Starting a visual artistry journey: working with shaders

I love the way fluids move; you can probably relate to that. The beautiful swirling patterns you see when washing the dishes, or those dramatic clouds over the ocean, give life a bit of extra meaning. That fascination is one of the reasons I studied maths at university, and it definitely influenced my choice to do a PhD in atmospheric fluid dynamics. How can we capture this beauty in a computer, though?

There are two philosophies when it comes to representing a fluid in code. One is simulation, which is what I have been doing so far: you take your best shot at describing the physics governing the fluid and then solve the resulting equations as accurately as you can on your computer. The other is to focus on the effect you create without worrying so much about predictive power. This second philosophy is that of the visual artist.

To explore this topic, I decided to use Unity, a game development engine with a strong online community. You create games in Unity primarily by coding in C#. My first project was a three-body problem simulation.

In Unity, the way things look is controlled by shaders. Shaders are code that runs on the graphics processing unit (GPU), and they are usually used to colour objects in the game or add effects to them.

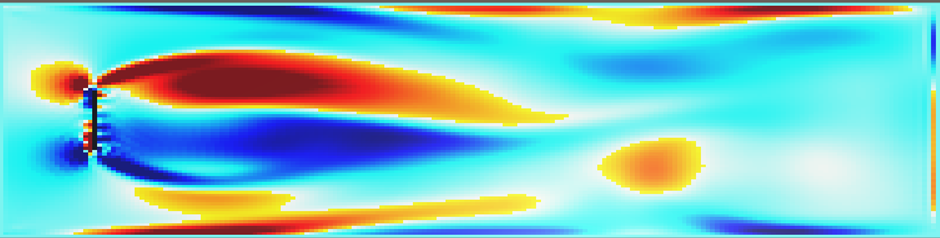

I was drawn to shaders because they can deliver extraordinary simulation speeds. I had spent quite some time creating a Lattice-Boltzmann fluid simulator in Unity. The result wasn't bad, but it ran on the CPU, and I knew that on a GPU my code could be orders of magnitude faster.

A GPU is faster because it allows for parallelisation: if your task can be split into many independent pieces, it will usually run faster on the GPU. Imagine your CPU is a chef. If he wants to peel 100 potatoes, he has to peel them one by one. A GPU is like a large team of simple processors that can each only do basic things; let's think of them as kitchen porters. Each porter could be given a potato, and the whole job would be done in the time it takes one of them to peel it. This is why the speed-ups can be so dramatic, particularly for a cellular automaton method like Lattice-Boltzmann, where every cell is updated using only its own state and that of its neighbours.
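To make the kitchen-porter picture concrete, here is a minimal sketch of one lattice-Boltzmann step. It is written in Python rather than HLSL so it runs anywhere. It assumes the simplest lattice I know of, D1Q2: one dimension, two populations per cell (one moving left, one moving right), modelling diffusion on a periodic ring. It is an illustrative toy, not my Unity simulator, and the function names are made up. Notice that the collision step only touches a single cell, and streaming only copies values to the immediate neighbours. That locality is what would let each cell be handed to its own GPU thread.

```python
def lbm_step(f_right, f_left, tau=1.0):
    """One collide-and-stream step of a toy D1Q2 lattice-Boltzmann model of diffusion.

    f_right[i] / f_left[i] are the populations at cell i moving right / left.
    Boundaries are periodic. tau is the relaxation time (assumed > 0.5 for stability).
    """
    n = len(f_right)
    post_right = [0.0] * n
    post_left = [0.0] * n
    # Collision: purely local, each cell only needs its own two populations.
    for i in range(n):
        rho = f_right[i] + f_left[i]
        f_eq = rho / 2.0  # equilibrium splits the density equally between directions
        post_right[i] = f_right[i] - (f_right[i] - f_eq) / tau
        post_left[i] = f_left[i] - (f_left[i] - f_eq) / tau
    # Streaming: each population hops one cell along its own velocity (wrapping around).
    new_right = [post_right[(i - 1) % n] for i in range(n)]
    new_left = [post_left[(i + 1) % n] for i in range(n)]
    return new_right, new_left


def density(f_right, f_left):
    """Total density in each cell."""
    return [r + l for r, l in zip(f_right, f_left)]


# A spike of "dye" in the middle of a 9-cell ring spreads out step by step.
f_r = [0.0] * 9
f_l = [0.0] * 9
f_r[4] = f_l[4] = 0.5
for _ in range(3):
    f_r, f_l = lbm_step(f_r, f_l)
print([round(x, 3) for x in density(f_r, f_l)])
```

With `tau=1.0` the collision relaxes each cell straight to equilibrium, so the dye spreads like a random walk while the total amount stays the same.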

The quest for shaders

The problem is that shaders are notoriously obscure in Unity. The language they are written in, High-Level Shader Language (HLSL), has very limited auto-complete support in Visual Studio (the default Unity IDE), and there is no way to print values to debug a shader. Instead, you have to carefully output colours and trace back where in the code the problem began.
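Debugging with colours boils down to encoding the value you want to inspect as a colour and then squinting at the result. Here is a minimal Python sketch of that idea, run on the CPU purely for illustration. The `debug_colour` helper and its colour convention are my own assumptions, not anything Unity provides:

- Negative values show up as shades of red.
- Positive values show up as shades of green.
- Anything outside the expected range turns pure blue.
- NaNs turn magenta.

In a real shader, this colour would simply be what the fragment function returns.

```python
def debug_colour(value, expected_max=1.0):
    """Map a scalar to an (r, g, b) colour in [0, 1] for visual debugging.

    Hypothetical convention: negative -> red, positive -> green,
    |value| > expected_max -> pure blue, NaN -> magenta.
    """
    if value != value:  # NaN is the only float not equal to itself
        return (1.0, 0.0, 1.0)
    if abs(value) > expected_max:
        return (0.0, 0.0, 1.0)
    intensity = abs(value) / expected_max
    if value < 0:
        return (intensity, 0.0, 0.0)
    return (0.0, intensity, 0.0)


# Inspect a row of "pixels" the way you would inspect a debug render.
row = [-0.5, 0.0, 0.25, 2.0, float("nan")]
print([debug_colour(v) for v in row])
```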

Coding shaders is therefore frustrating. This has created a feedback loop: little support or content is produced about coding them, so people turn to alternatives like Shader Graph. Shader Graph uses a visual, node-based interface instead of lines of written code, and I gather it's an easier route for pretty much everything except simulations.

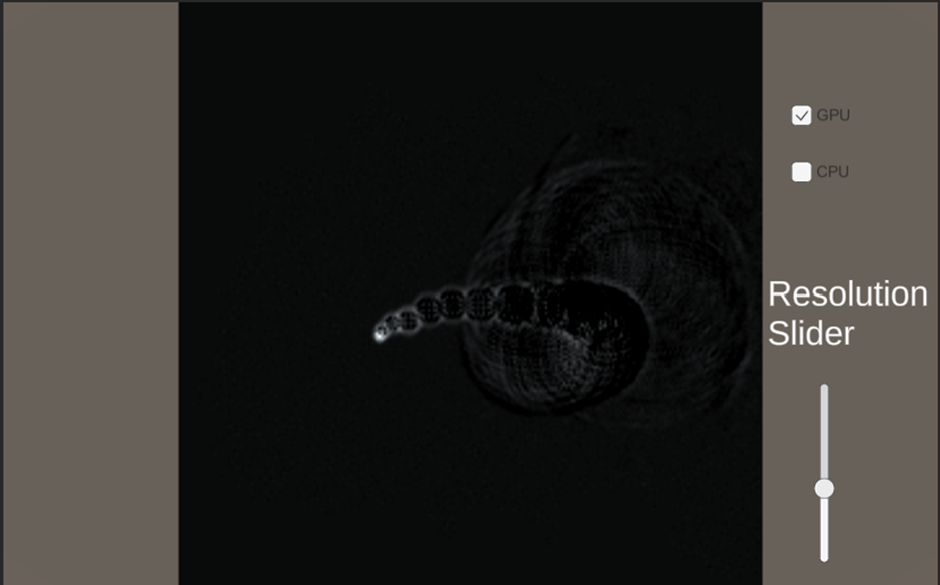

After a few videos about shaders, it became clear that what I needed was a compute shader. After two fantastic videos on the wave equation (these and other resources are linked at the end of this post), I could see that compute shaders were actually quite easy to add to my projects. The snag was that they don't work with WebGL, the platform that allows me to publish simulations to this website. For you to play with what I made, I would have to learn regular shaders and hack my way around the issue. The things we do for love.
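The wave equation suits a compute shader because each grid cell's next height depends only on its own current and previous values and on its four neighbours. Here is a minimal Python sketch of the per-cell "kernel" a compute shader would run for every cell at once. Several details are my assumptions for illustration, not taken from the videos:

- The explicit central-difference scheme.
- The square grid.
- The edges held at zero.
- The Courant number `c_dt_dx`.

`wave_kernel` and `wave_step` are hypothetical names.

```python
def wave_kernel(u, u_prev, i, j, c_dt_dx):
    """Next value of cell (i, j) for the 2D wave equation (explicit central differences).

    This is the work a single compute-shader thread would do. Assumes a square grid
    whose edge cells are held at zero. Stable for c_dt_dx <= 1/sqrt(2).
    """
    n = len(u)
    if i == 0 or j == 0 or i == n - 1 or j == n - 1:
        return 0.0
    laplacian = u[i + 1][j] + u[i - 1][j] + u[i][j + 1] + u[i][j - 1] - 4.0 * u[i][j]
    return 2.0 * u[i][j] - u_prev[i][j] + c_dt_dx ** 2 * laplacian


def wave_step(u, u_prev, c_dt_dx=0.5):
    """Apply the kernel to every cell; on a GPU these calls would run in parallel."""
    n = len(u)
    u_next = [[wave_kernel(u, u_prev, i, j, c_dt_dx) for j in range(n)] for i in range(n)]
    return u_next, u  # the current field becomes the previous one


# Drop a "pebble" in the middle of a 7x7 pond, starting from rest, and take two steps.
n = 7
u = [[0.0] * n for _ in range(n)]
u[3][3] = 1.0
u_prev = [row[:] for row in u]
for _ in range(2):
    u, u_prev = wave_step(u, u_prev)
```

Because every call to `wave_kernel` reads only the old fields and writes one new cell, the order of the calls doesn't matter. That is exactly what a GPU needs.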

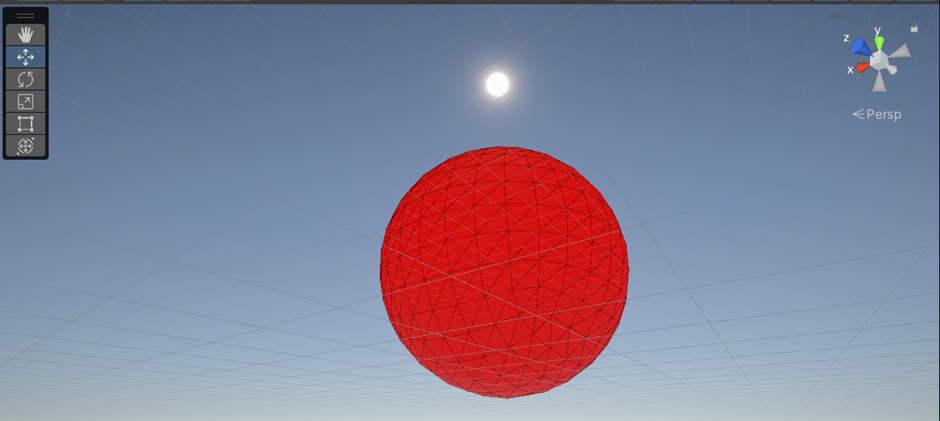

After following a basic tutorial on shaders, I wrote my first unlit shader.

Yep, it just makes the object red. Judging by these screenshots, it looks like I'm evolving backwards, but I am learning. The red colour comes from a fragment shader function.

Rendering an object in Unity involves vertices and fragments. Vertices are the corner points of the wireframe (the mesh) used to create the object, and they are joined up into triangles. For example, the next picture is the wireframe used to create a sphere. Fragments are the pixels that colour in all those triangles on screen.

So all I did was write a function that, when passed any of the pixels that make up the sphere on the screen, says: make this red!
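To make the vertex/fragment split concrete, here is a minimal CPU-side sketch in Python. It is not HLSL, and it is not how Unity works internally. A rasterisation step takes a triangle's three vertices in screen space and finds the pixels whose centres it covers. Each of those fragments is then handed to a fragment function that, like my first shader, ignores its input and returns opaque red. The edge-function coverage test is one standard textbook approach. Real GPUs do this in dedicated hardware, with tie-breaking rules for shared edges and handling of degenerate triangles that this sketch skips. Every name here is made up for illustration.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Colour = Tuple[float, float, float, float]  # (r, g, b, a), each in [0, 1]


def edge(a: Point, b: Point, p: Point) -> float:
    """Signed-area test: its sign says which side of the edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterise(v0: Point, v1: Point, v2: Point, width: int, height: int) -> List[Tuple[int, int]]:
    """Turn one triangle (three screen-space vertices) into fragments: the covered pixels."""
    fragments = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # test the pixel centre
            w0, w1, w2 = edge(v1, v2, p), edge(v2, v0, p), edge(v0, v1, p)
            # Inside if all three tests agree in sign (works for either winding order).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                fragments.append((x, y))
    return fragments


def frag(screen_pos: Point) -> Colour:
    """My first 'shader': whatever pixel it is handed, make it red."""
    return (1.0, 0.0, 0.0, 1.0)


def draw_triangle(v0: Point, v1: Point, v2: Point, width: int, height: int) -> Dict[Tuple[int, int], Colour]:
    """Rasterise, then call the fragment function once per fragment (in parallel on a GPU)."""
    return {(x, y): frag((x + 0.5, y + 0.5)) for (x, y) in rasterise(v0, v1, v2, width, height)}


# One triangle of a mesh, already projected onto a tiny 8x8 "screen".
image = draw_triangle((1.0, 1.0), (7.0, 1.0), (1.0, 7.0), width=8, height=8)
print(len(image), "red fragments")  # prints: 21 red fragments
```

A whole sphere is just many such triangles. Swapping `frag` for something that uses `screen_pos`, for example returning `(screen_pos[0] / 8, 0.0, 0.0, 1.0)`, is the first step from "make it red" towards more interesting effects.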

I started the official Unity shaders course but realised that it was a bit too code-light to be useful right away. Eventually I found Alan Zucconi's fantastic blog.

In his posts on using shaders for simulations, he showed how to use a ping-pong method to do calculations in a shader. This is definitely a hack compared to compute shaders, and as you play with the example below you will see artefacts coming from the size of the batches sent to the GPU, but it works, and it is faster than the CPU.
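The idea behind ping-pong is that a regular shader can't read from the same texture it is writing to in a single pass, so you keep two. You read the current state from one, write the updated state into the other, and then swap their roles for the next step. Here is a minimal Python sketch of that loop, with lists standing in for render textures and a simple 1D diffusion (blur) update playing the part of the shader. The update rule, the buffer size, `alpha` and the function names are illustrative assumptions, not Alan Zucconi's code.

```python
def diffuse_pass(src, dst, alpha=0.25):
    """The 'shader': every texel of dst is computed only from texels of src.

    Explicit 1D diffusion with clamped edges; stable for alpha <= 0.5.
    """
    n = len(src)
    for i in range(n):
        left = src[max(i - 1, 0)]
        right = src[min(i + 1, n - 1)]
        dst[i] = src[i] + alpha * (left - 2.0 * src[i] + right)


def simulate(initial, steps):
    """Ping-pong between two buffers instead of updating one in place."""
    ping = list(initial)
    pong = [0.0] * len(initial)
    for _ in range(steps):
        diffuse_pass(ping, pong)  # read from ping, write into pong
        ping, pong = pong, ping  # swap roles: the fresh result is read next step
    return ping


state = simulate([0.0, 0.0, 4.0, 0.0, 0.0], steps=2)
print(state)
```

Updating a single buffer in place would mix old and new values, because cell `i` would read a neighbour that had already been updated during the same step. The second buffer guarantees that every cell sees only the previous state.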

Watch this space.

Resources I found helpful:

Freya’s introduction to shaders: https://www.youtube.com/watch?v=kfM-yu0iQBk&ab_channel=FreyaHolm%C3%A9r

Overview video on compute shaders: https://www.youtube.com/watch?v=BrZ4pWwkpto&t=6s&ab_channel=GameDevGuide

Lightbulb-moment video actually using compute shaders: https://www.youtube.com/watch?v=4CNad5V9wD8&ab_channel=SpontaneousSimulations

Super basic tutorial on shaders (you make a red texture): https://www.youtube.com/watch?v=W-gZAFM9d9E&t=1s&ab_channel=NikLever

Official Unity introduction to shaders course: https://learn.unity.com/tutorial/get-started-with-shaders-and-materials?uv=2020.3&pathwayId=61a65568edbc2a00206076dd&missionId=619f9b6cedbc2a39aabd7b1e

Alan Zucconi’s fantastic blog on using shaders for simulations (for when compute shaders won’t work on your platform of choice): https://www.alanzucconi.com/2016/03/02/shaders-for-simulations/
